StrangVR Things

Class: Experimental TV Studio

Advisor: Dr. Janet Murray

Overview

A VR prototype set in the universe of "Stranger Things". Our team extended the experience of the TV show by turning off-screen scenes into VR experiences, letting the user go through a piece of the story (Eleven's escape from the lab) that is not explicitly shown on screen.

My Role

Leveraging the VRTK Unity package, I designed and developed the telekinesis system for the HTC Vive controllers, the user locomotion mechanics, and the monster animations and interactions.

Technical Highlights

Phase One

Research & Discovery

We started our research by learning VR experience conventions from both a design and a technical standpoint. We learned about design principles by reading about VR design and by using VR devices such as Google Cardboard, Gear VR, Oculus, and Vive. Based on my proposal, we decided to make an experience for "Stranger Things", as we thought the storyline would lend itself to exploring interesting mechanics in VR (e.g. telekinesis, parallel universes, and the unease of being in the same environment as its monster). After additional brainstorming, we decided to create an experience with a narrative set in the show's world and specific tasks for the user to accomplish.

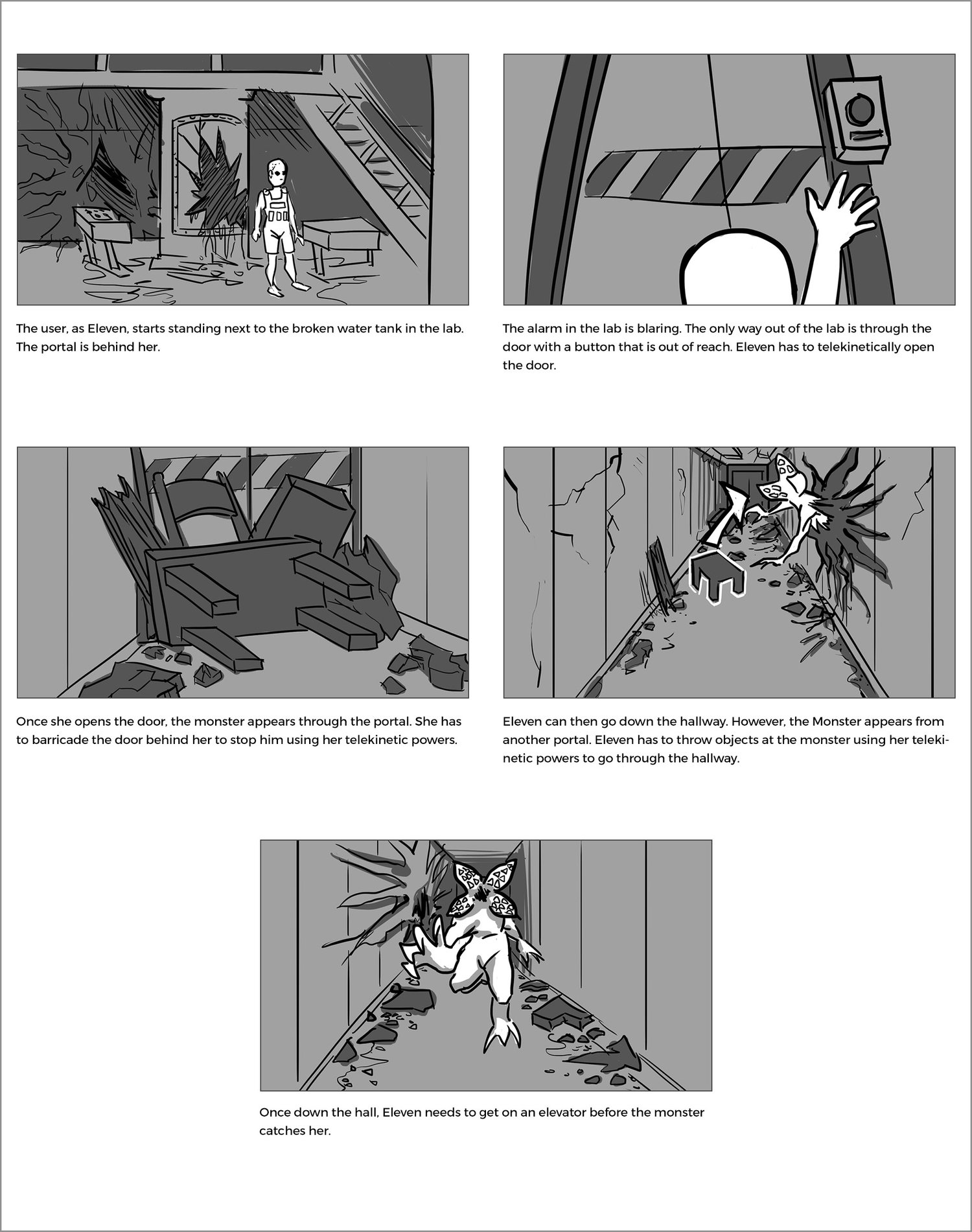

Storyboard

Telekinesis

The first major technical task I undertook was developing the telekinesis functionality for the Vive controllers. Building on the 'simple pointer' example from the VRTK Unity package, I started with a system where users point the controller at the object they want to pick up and press the thumb-pad button, which makes the object a child of the controller (thus transferring the controller's movement to the object). A raycast from the controller captures the object's information when it is pointed at, so the user can pick up objects from afar. However, this parent/child system made the object's movement too rigid; I wanted to create the feeling that the object was 'floating'.
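The pointing step boils down to casting a ray from the controller and taking the nearest object it hits. In Unity, `Physics.Raycast` does this against real colliders; the Python sketch below is only a simplified stand-in that assumes every grabbable object is approximated by a bounding sphere. `Grabbable` and `raycast` are hypothetical names for illustration, not VRTK or Unity APIs. The function also returns the hit point, which is where a visual effect could be placed.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Grabbable:
    """Hypothetical grabbable object, approximated by a bounding sphere."""
    name: str
    center: Vec3
    radius: float


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast(
    origin: Vec3,
    direction: Vec3,
    objects: Sequence[Grabbable],
    max_distance: float = math.inf,
) -> Optional[Tuple[Grabbable, float, Vec3]]:
    """Return (object, distance, hit point) for the nearest sphere hit, or None."""
    length = math.sqrt(_dot(direction, direction))
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    d = (direction[0] / length, direction[1] / length, direction[2] / length)

    best: Optional[Tuple[Grabbable, float]] = None
    for obj in objects:
        # Solve |origin + t*d - center|^2 = radius^2 for t (d is unit length).
        oc = _sub(origin, obj.center)
        b = _dot(oc, d)
        c = _dot(oc, oc) - obj.radius ** 2
        disc = b * b - c
        if disc < 0.0:
            continue  # the ray misses this sphere
        root = math.sqrt(disc)
        t = -b - root  # nearer intersection
        if t < 0.0:
            t = -b + root  # origin is inside the sphere: use the far side
        if t < 0.0 or t > max_distance:
            continue  # sphere is behind the controller or out of range
        if best is None or t < best[1]:
            best = (obj, t)

    if best is None:
        return None
    obj, t = best
    hit = (origin[0] + d[0] * t, origin[1] + d[1] * t, origin[2] + d[2] * t)
    return obj, t, hit


scene = [
    Grabbable("box", (0.0, 0.0, 5.0), 0.5),
    Grabbable("canister", (0.0, 0.0, 10.0), 1.0),
]
result = raycast((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene)
if result is not None:
    target, distance, point = result
    print(target.name, distance, point)  # box 4.5 (0.0, 0.0, 4.5)
```

Returning only the nearest hit matters when several objects line up along the pointer: the first one the ray reaches is the one that gets grabbed.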

Instead of parenting the object to the controller directly, I implemented a spring joint system, which sacrificed a little control but created the 'floating' feeling I was targeting. I also wanted more visual feedback, so I spawned a particle emitter at the point where the raycast hits the object. I tested the telekinesis system on myself and my group members, and after some practice they were able to use it effectively. The video on the right shows the spring joint telekinesis system.
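A spring joint replaces the hard parent/child link with a force that pulls the object toward a target point held by the controller, while a damping term bleeds off velocity so the object settles instead of oscillating. Unity's `SpringJoint` has its own solver; the sketch below is only a simplified per-axis model of the same idea (Hooke's law plus damping, integrated with semi-implicit Euler). `FloatingBody`, `step_spring`, and the stiffness, damping, and 90 Hz timestep values are assumptions for illustration, not values from the project.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class FloatingBody:
    """Hypothetical rigid body held by telekinesis (unit mass by default)."""
    position: List[float]
    velocity: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])
    mass: float = 1.0


def step_spring(
    body: FloatingBody,
    anchor: Sequence[float],
    stiffness: float,
    damping: float,
    dt: float,
) -> None:
    """Advance the body one timestep toward the anchor with a damped spring."""
    for i in range(3):
        displacement = anchor[i] - body.position[i]
        # Hooke's law pulls toward the anchor; damping opposes current velocity.
        force = stiffness * displacement - damping * body.velocity[i]
        # Semi-implicit Euler: update velocity first, then position.
        body.velocity[i] += force / body.mass * dt
        body.position[i] += body.velocity[i] * dt


DT = 1.0 / 90.0  # assumed 90 Hz update rate
body = FloatingBody(position=[0.0, 0.0, 0.0])
anchor = (0.0, 1.0, 0.0)  # assumed target point in front of the controller

step_spring(body, anchor, stiffness=50.0, damping=10.0, dt=DT)
after_one_frame = body.position[1]  # still far behind the anchor

for _ in range(269):  # about three seconds in total
    step_spring(body, anchor, stiffness=50.0, damping=10.0, dt=DT)
```

With parenting, the object would snap to the anchor in a single frame; here it trails the controller and eases into place, which is the trade-off of a little precision for a softer feel. Raising `stiffness` tightens the follow, and raising `damping` reduces overshoot.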

Testing & Feedback

We had an opportunity to test our prototype with a variety of users at Georgia Tech's GVU Demo Day, when the college opens its doors for anyone to come discover current research projects. Watching users go through our experience taught us a lot about what was and wasn't working in the prototype. Users enjoyed the telekinesis and the general aspects that room-scale VR brings (body movement equals digital movement). We also uncovered some design issues, including how to communicate directions, motion sickness caused by our locomotion solution, and how to attract the user's attention to important elements. Based on this feedback, we came up with solutions to some of these issues while further refining elements of the prototype.

Phase Two

Refining the Environment

To iterate on the prototype, we used what we had learned while making and testing the rough prototype. Our largest problem centered on locomotion: the room extender was difficult to explain and understand, and the ability to toggle it on and off confused people. We decided to make its use mandatory so we could plan the level size more easily, and we removed the hallway section of the experience. We redesigned the interactions to all happen within the laboratory, with the elevator arriving directly inside it. We also simplified the interactions to fit what we could accomplish within the semester.
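A room extender amplifies the user's physical movement so that a small tracked play area covers a larger virtual one. The sketch below shows one generic way to do this, scaling the headset's offset from the play-area center by a fixed gain; it is an assumption for illustration, not VRTK's actual implementation, and `extended_position`, `virtual_extent`, and the example numbers are hypothetical. A fixed, always-on gain also makes level planning straightforward: the reachable virtual area is simply the physical play area multiplied by the gain.

```python
from typing import Tuple

Vec2 = Tuple[float, float]  # position on the floor plane (x, z), in meters


def extended_position(physical: Vec2, center: Vec2, gain: float) -> Vec2:
    """Map a tracked floor position to a virtual one by scaling its offset from center."""
    return (
        center[0] + (physical[0] - center[0]) * gain,
        center[1] + (physical[1] - center[1]) * gain,
    )


def virtual_extent(physical_width: float, physical_depth: float, gain: float) -> Vec2:
    """Size of the virtual area reachable from a physical play area at a given gain."""
    return (physical_width * gain, physical_depth * gain)


# A user 1 m right and 0.5 m forward of the center, with an assumed gain of 2.
print(extended_position((1.0, 0.5), (0.0, 0.0), 2.0))  # (2.0, 1.0)
# An assumed 3 m x 2.5 m play area then covers a 6 m x 5 m virtual room.
print(virtual_extent(3.0, 2.5, 2.0))  # (6.0, 5.0)
```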

Monster Interactions

While my group members rescaled the environment, I designed and developed the monster interactions. We found a pre-built monster on the Asset Store, though not the actual model from the show. Because the experience now took place in one room, we needed a system to keep the monster at bay without forcing the user to move around too much. I created three spawn points for the monster around the room: when the user throws a gas canister and successfully hits the monster, it respawns at one of those three points, chosen at random. We chose gas canisters and explosions because fire seemed to be one of the monster's weaknesses in the show. We also placed boxes in the play area that stun the monster when they hit it. I programmed the monster's animation states to change based on collisions and to always revert to walking toward the user's current position. However, we did not implement any AI that would let the monster walk around objects in the room.
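The monster behavior above amounts to a small state machine: walking toward the user by default, a timed stun when a box hits it, and a random respawn when a gas canister hits it. The project drove this through Unity animation states; the Python sketch below models only the logic, and `Monster`, `MonsterState`, the collision labels, speed, stun duration, and spawn coordinates are all hypothetical. Like the prototype, it walks in a straight line with no pathfinding around obstacles.

```python
import math
import random
from enum import Enum, auto
from typing import Optional, Sequence, Tuple

Vec2 = Tuple[float, float]  # floor-plane position (x, z)


class MonsterState(Enum):
    WALKING = auto()
    STUNNED = auto()


class Monster:
    def __init__(
        self,
        spawn_points: Sequence[Vec2],
        speed: float = 1.0,
        stun_seconds: float = 2.0,
        rng: Optional[random.Random] = None,
    ) -> None:
        self.spawn_points = list(spawn_points)
        self.speed = speed
        self.stun_seconds = stun_seconds
        self.rng = rng if rng is not None else random.Random()
        self.position = self.rng.choice(self.spawn_points)
        self.state = MonsterState.WALKING
        self.stun_timer = 0.0

    def on_collision(self, kind: str) -> None:
        """React to being hit by a thrown object."""
        if kind == "gas_canister":
            # Reappear at a random spawn point and resume walking.
            self.position = self.rng.choice(self.spawn_points)
            self.state = MonsterState.WALKING
            self.stun_timer = 0.0
        elif kind == "box":
            # Stunned in place for a fixed duration.
            self.state = MonsterState.STUNNED
            self.stun_timer = self.stun_seconds

    def update(self, user_position: Vec2, dt: float) -> None:
        """Advance one frame; a stunned monster reverts to walking when its timer runs out."""
        if self.state is MonsterState.STUNNED:
            self.stun_timer -= dt
            if self.stun_timer <= 0.0:
                self.state = MonsterState.WALKING
            return
        # Walk straight toward the user's current position (no obstacle avoidance).
        dx = user_position[0] - self.position[0]
        dz = user_position[1] - self.position[1]
        distance = math.hypot(dx, dz)
        if distance == 0.0:
            return
        step = min(self.speed * dt, distance)
        self.position = (
            self.position[0] + dx / distance * step,
            self.position[1] + dz / distance * step,
        )


spawns = [(5.0, 0.0), (-5.0, 0.0), (0.0, 5.0)]  # hypothetical spawn points
monster = Monster(spawns, rng=random.Random(0))
monster.update((0.0, 0.0), dt=1.0)    # walks 1 m toward the user
monster.on_collision("box")           # stunned for 2 s
monster.on_collision("gas_canister")  # respawns at a random spawn point
```

Injecting the random generator keeps the respawn choice reproducible for testing while still picking uniformly among the three points.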

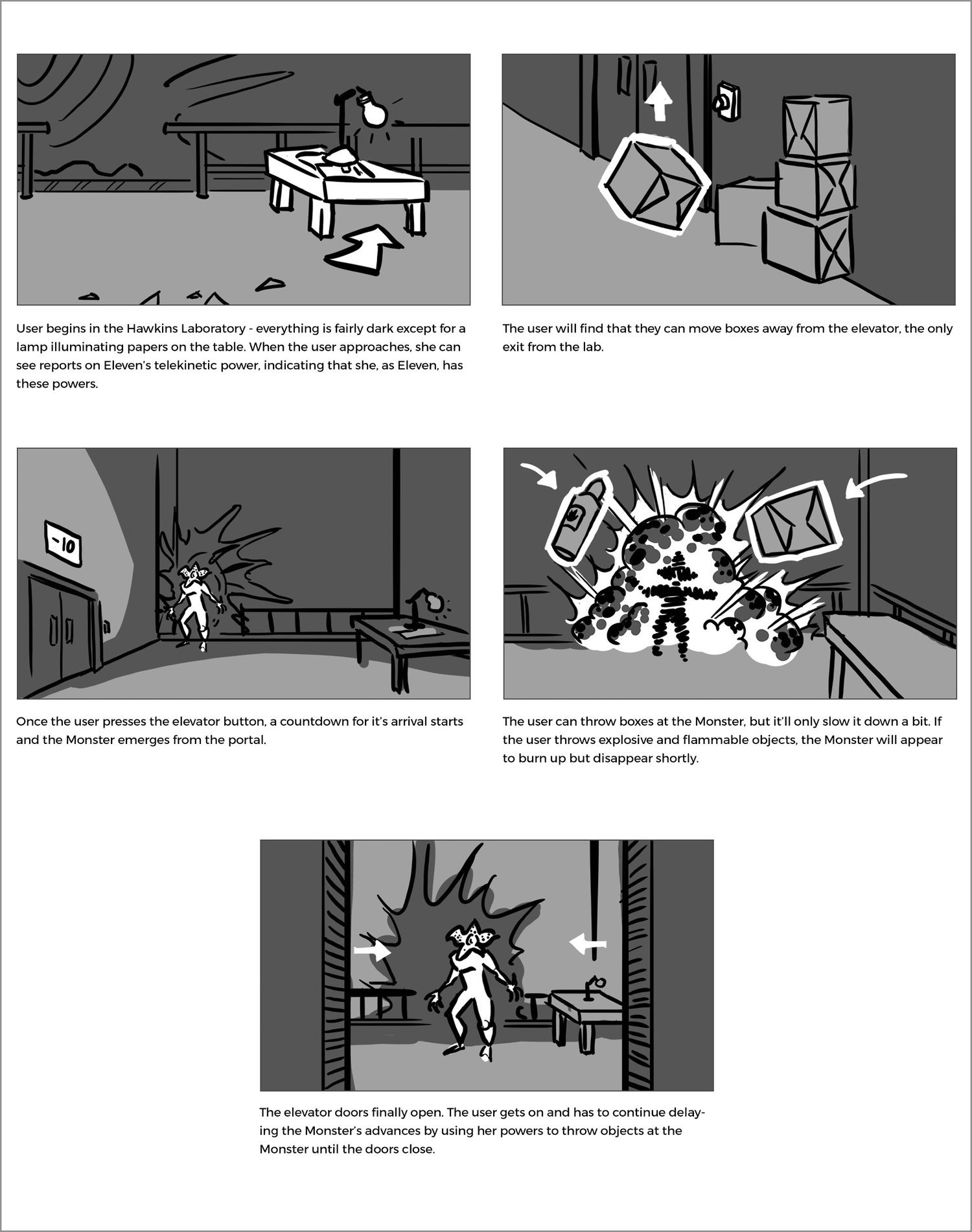

Second Storyboard

Phase Three

Final Prototype

In our final prototype, we managed to implement all the interactions, rescale the room, and polish the environment's aesthetics. My teammate, Annick, modeled and textured the environment assets, and I worked on the environment lighting.

The story beats of the whole experience are as follows:

- The user 'wakes up' in a destroyed laboratory.

- The user is given time to explore the space and discover their telekinesis power.

- In order to escape, the user must call the elevator via the button next to the door. Once the button is pressed, the countdown starts and the monster emerges from the portal.

- Using boxes and gas canisters, the user must keep the monster at bay until the elevator doors open.

- Once inside, the user must push another elevator button to end the experience.

Final Testing & Feedback

At the end of the semester, we tested the final prototype at Professor Janet Murray's research projects presentation. We received a lot of feedback, mostly positive, along with some things we needed to improve. Most of the pain points centered on the following:

- locomotion: what is the best way for users to move around? We need to consider that their movement is limited by the physical play space of the Vive setup.

- focus: how do we get the user to pay attention to the element that matters? Rules of composition depend heavily on the picture frame, so visual design for VR is a whole new beast: users can look in whatever direction they want, and we need to find ways to direct their gaze without a frame.

- learning: how do we teach users what they need to do? The telekinesis power is not intuitive, on top of the VR medium itself being new. Many people initially had trouble figuring out how to use their powers, and then how to use them on the monster.